Abstract

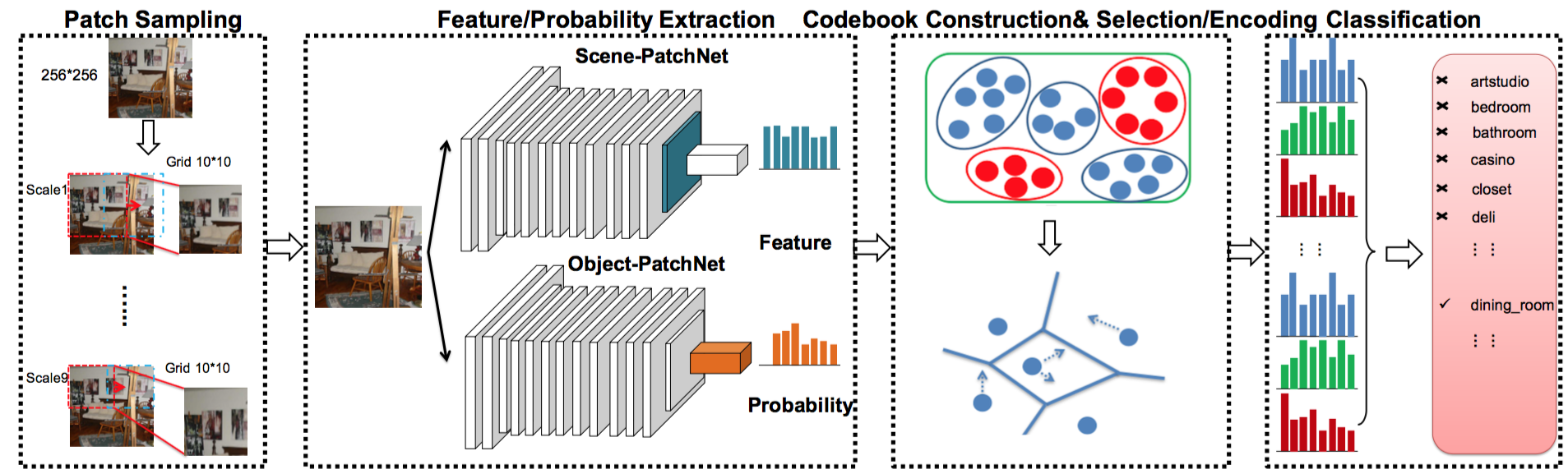

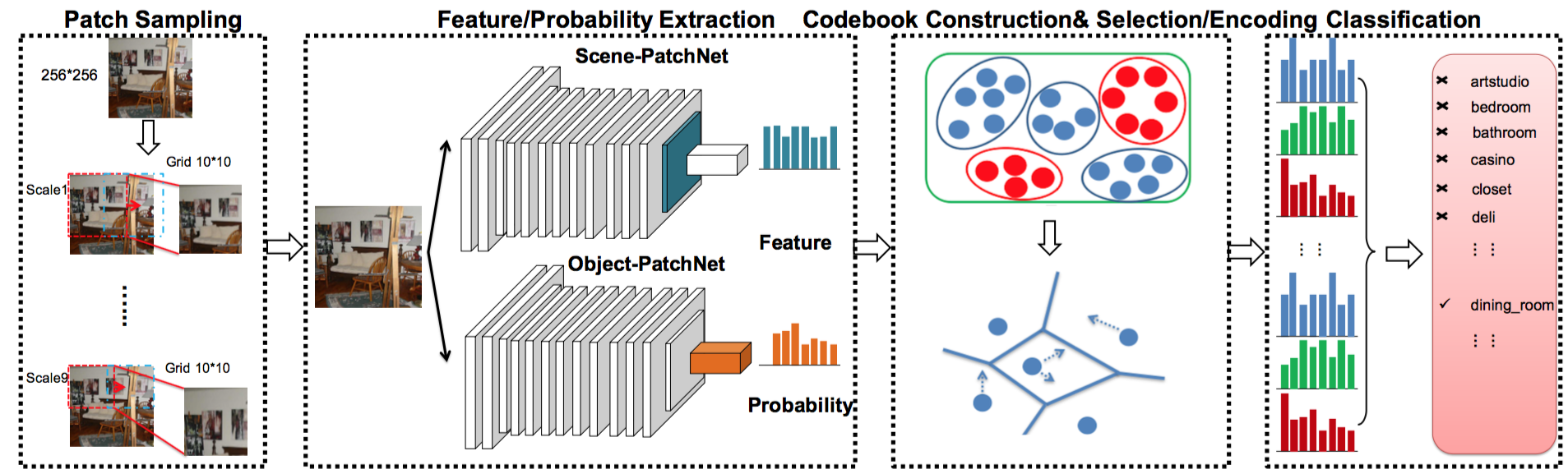

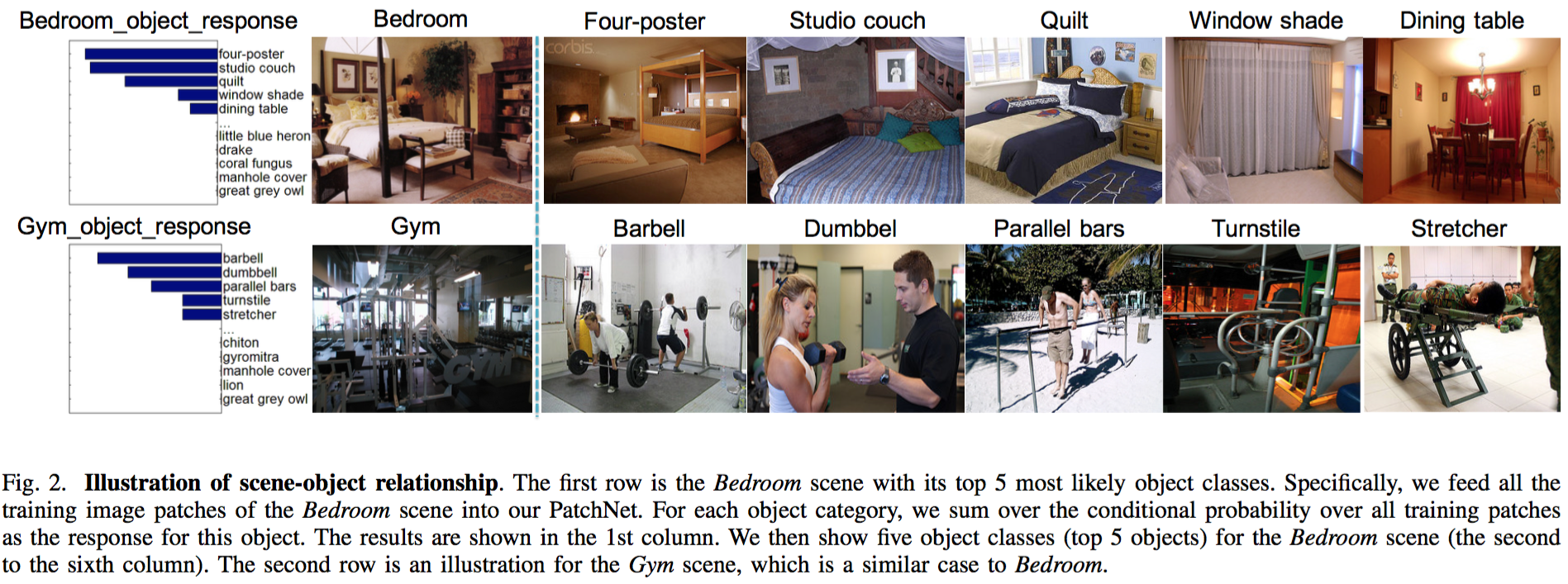

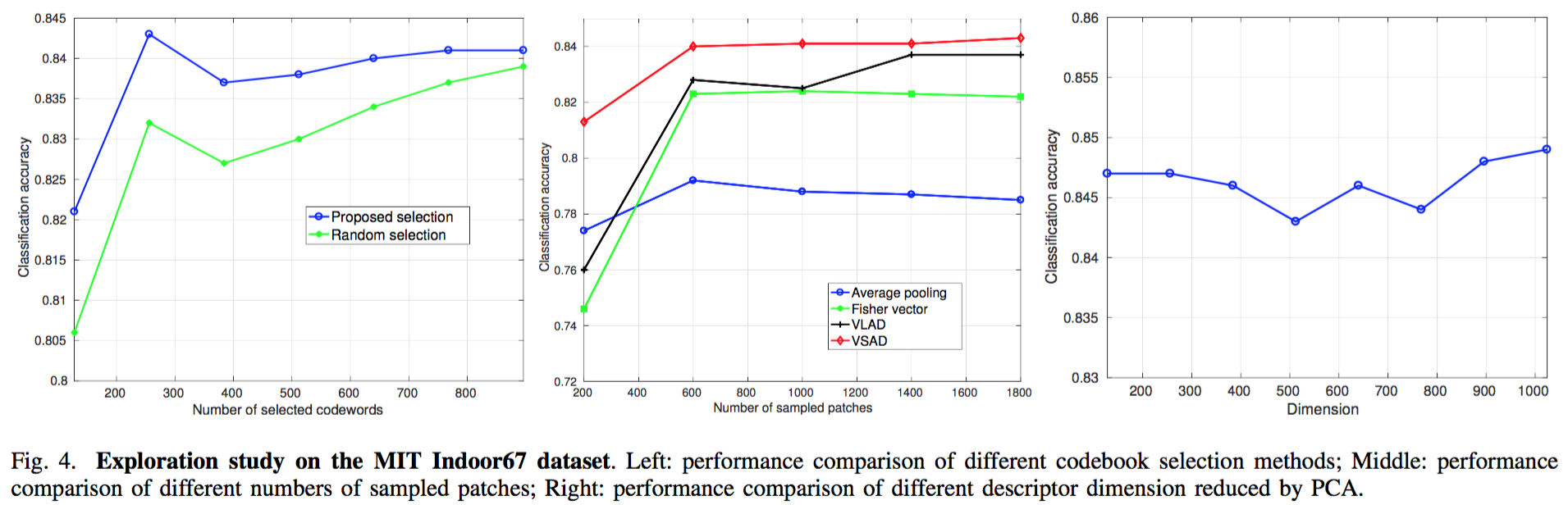

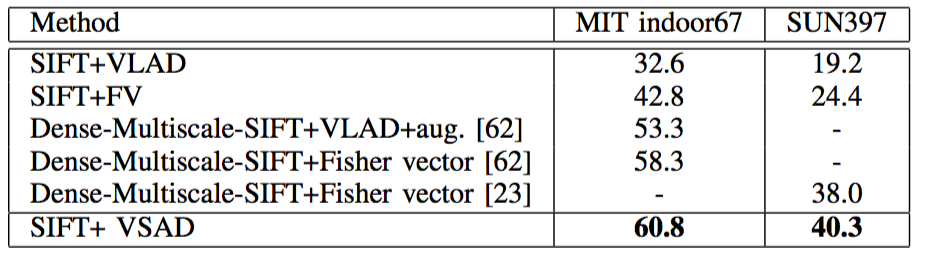

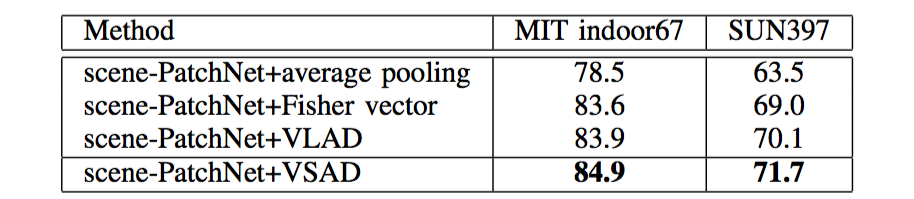

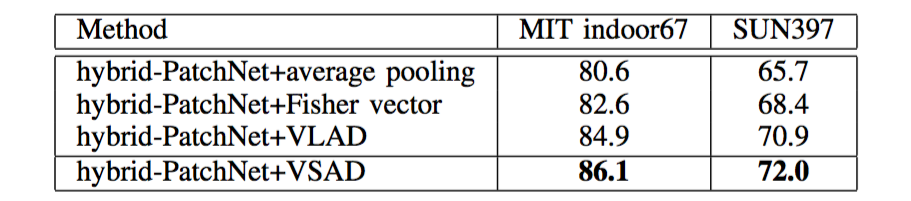

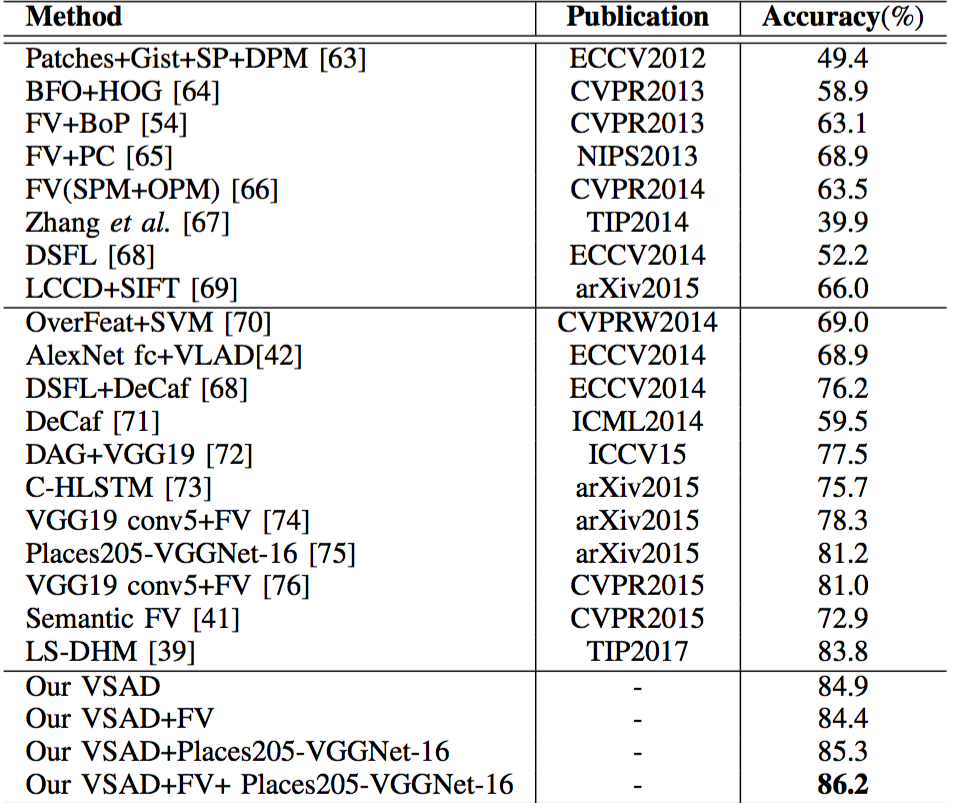

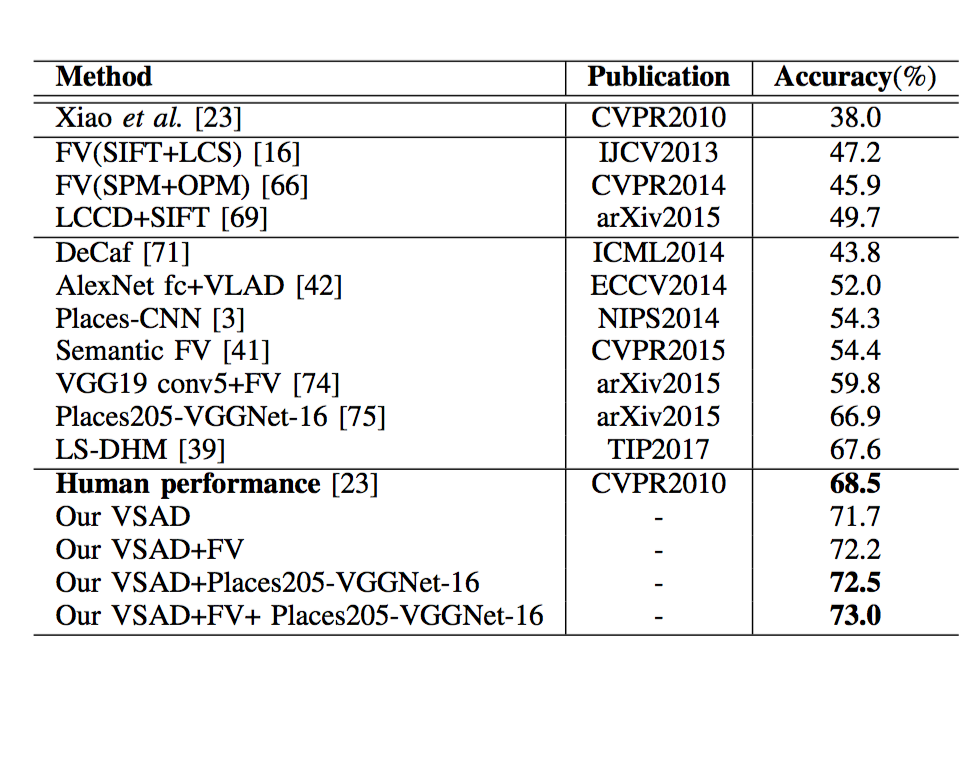

Conventional feature encoding schemes (e.g., Fisher vectors) built on local descriptors (e.g., SIFT) and recent deep convolutional neural networks (CNNs) are two successful classes of methods for image recognition. In this paper, we propose a hybrid representation for image recognition, with a focus on scene recognition, that combines the strong discriminative power of CNNs with the simplicity of descriptor encoding schemes. To this end, we make three main contributions. First, we propose a patch-level, end-to-end architecture, called PatchNet, to model the appearance of local patches. PatchNet is a customized network trained in a weakly supervised manner: image-level labels guide the patch-level feature extraction. Second, we present a hybrid visual representation, called VSAD, which uses the robust features of PatchNet to describe local patches and the semantic probabilities of PatchNet to aggregate these patches into a global representation. Third, based on VSAD, we propose a scene recognition approach that achieves state-of-the-art performance on two standard benchmarks: MIT Indoor67 (86.2%) and SUN397 (73.0%).
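The abstract does not state the aggregation formula, so the following is only a minimal sketch of the general idea it describes: local patch descriptors are softly assigned to semantic categories using per-patch class probabilities (in place of the GMM or k-means clusters of a Fisher vector or VLAD codebook) and then pooled into one global vector. The VLAD-style residuals, the probability-weighted category centers, and the signed-square-root plus L2 normalization are assumptions borrowed from conventional encoding schemes; the function names `semantic_centers` and `semantic_aggregate` are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def semantic_centers(
    descriptors: Sequence[Sequence[float]],
    probabilities: Sequence[Sequence[float]],
) -> Matrix:
    """Hypothetical helper: probability-weighted mean descriptor per category.

    descriptors[i] is the D-dim feature of patch i; probabilities[i][k] is the
    (assumed) semantic probability that patch i belongs to category k.
    """
    num_classes = len(probabilities[0])
    dim = len(descriptors[0])
    centers = [[0.0] * dim for _ in range(num_classes)]
    for k in range(num_classes):
        mass = sum(p[k] for p in probabilities)
        if mass <= 0.0:
            continue  # no probability mass for category k: keep a zero center
        for d in range(dim):
            weighted = sum(p[k] * x[d] for x, p in zip(descriptors, probabilities))
            centers[k][d] = weighted / mass
    return centers


def semantic_aggregate(
    descriptors: Sequence[Sequence[float]],
    probabilities: Sequence[Sequence[float]],
    centers: Sequence[Sequence[float]],
) -> List[float]:
    """Pool N patch descriptors into a single K*D vector (assumed VLAD-style)."""
    num_classes = len(centers)
    dim = len(centers[0])
    blocks = [[0.0] * dim for _ in range(num_classes)]
    for x, p in zip(descriptors, probabilities):
        for k in range(num_classes):
            # Soft assignment: each patch contributes its residual to
            # category k in proportion to its semantic probability p[k].
            for d in range(dim):
                blocks[k][d] += p[k] * (x[d] - centers[k][d])
    vec = [v for block in blocks for v in block]  # concatenate the K blocks
    # Assumed normalization: signed square root, then L2.
    vec = [math.copysign(math.sqrt(abs(v)), v) for v in vec]
    norm = math.sqrt(sum(v * v for v in vec))
    if norm > 0.0:
        vec = [v / norm for v in vec]
    return vec


if __name__ == "__main__":
    patches = [[1.0, 0.0], [0.0, 1.0]]  # two toy 2-D patch descriptors
    probs = [[1.0, 0.0], [0.0, 1.0]]    # each patch fully in one of 2 categories
    zero_centers = [[0.0, 0.0], [0.0, 0.0]]
    print(semantic_aggregate(patches, probs, zero_centers))
```

Using semantic probabilities rather than unsupervised clusters means each block of the final vector corresponds to a nameable category, which is the intuition behind aggregating with PatchNet's outputs; in practice the centers would be estimated on training patches, not on the image being encoded.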